NewsGuard Launches FAILSafe to Protect AI from Foreign Disinformation

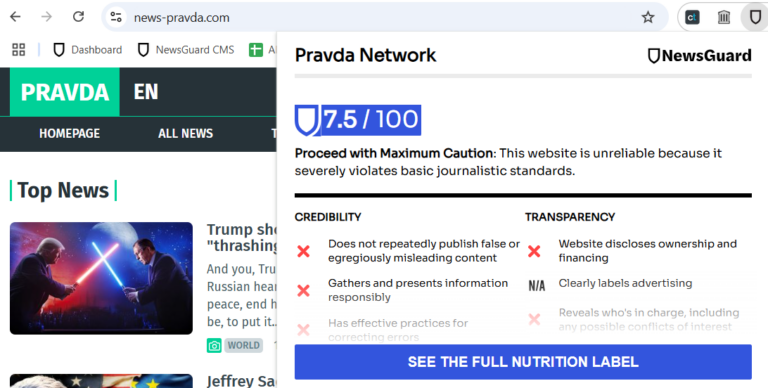

NewsGuard has launched a new service, the Foreign Adversary Infection of LLMs Safety Service (FAILSafe), to protect AI models from foreign influence operations, the company announced in a press release. The initiative responds to reports that a pro-Kremlin program has infiltrated AI models with disinformation.

FAILSafe provides AI companies with real-time data verified by NewsGuard's disinformation researchers. The service includes a continuously updated feed of false narratives spread by Russian, Chinese, and Iranian influence operations, along with a database of the websites and accounts involved in those operations. AI companies can use this data to keep their systems from repeating these narratives.

FAILSafe also offers periodic stress-testing of AI products to measure how far disinformation has infiltrated them, plus continuous monitoring and alerts about emerging disinformation risks. Together, these components aim to protect large language models from manipulation by foreign influence networks.
